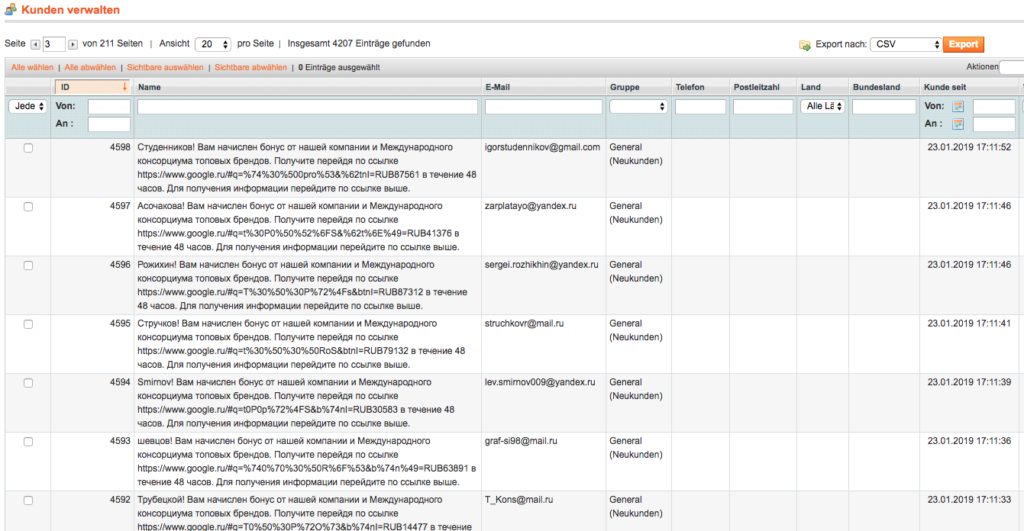

Today I ran into an issue: after dumping a Magento database from one MySQL instance and importing it into another, the order dates had moved 2 hours into the future. How come?

Investigation and testing showed that the source database was running with the time zone "GMT" aka "UTC" aka +00. The target database was running in the system time zone, which was CEST aka "Europe/Berlin" aka +02. Still no problem in sight, since Magento stores all values in the time zone "GMT" anyway!

It turns out Magento uses the data type `TIMESTAMP` for order dates and similar columns. The MySQL documentation states:

> MySQL converts [`TIMESTAMP`](https://dev.mysql.com/doc/refman/5.7/en/datetime.html) values from the current time zone to UTC for storage, and back from UTC to the current time zone for retrieval. (This does not occur for other types such as `DATETIME`.) By default, the current time zone for each connection is the server’s time. The time zone can be set on a per-connection basis. As long as the time zone setting remains constant, you get back the same value you store. If you store a `TIMESTAMP` value, and then change the time zone and retrieve the value, the retrieved value is different from the value you stored. This occurs because the same time zone was not used for conversion in both directions. The current time zone is available as the value of the `time_zone` system variable. For more information, see Section 5.1.13, “MySQL Server Time Zone Support”.

Exactly this happened: If you store a TIMESTAMP value, and then change the time zone and retrieve the value, the retrieved value is different from the value you stored.
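To make the shift concrete, here is a minimal sketch in Python that models the conversion described in the quote. It is not MySQL itself: the helper names `store_timestamp` and `read_timestamp` and the sample order date are made up for illustration, and it assumes Python 3.9+ with time zone data available for `zoneinfo`.

```python
from datetime import datetime, timezone
from zoneinfo import ZoneInfo

FMT = "%Y-%m-%d %H:%M:%S"
UTC = timezone.utc
berlin = ZoneInfo("Europe/Berlin")


def store_timestamp(literal, session_tz):
    """Model of a TIMESTAMP write: interpret the literal in the session zone, keep UTC."""
    local = datetime.strptime(literal, FMT).replace(tzinfo=session_tz)
    return local.astimezone(UTC)


def read_timestamp(stored_utc, session_tz):
    """Model of a TIMESTAMP read: convert the stored UTC instant to the session zone."""
    return stored_utc.astimezone(session_tz).strftime(FMT)


# Magento writes a GMT value through a session running in UTC (source server).
stored = store_timestamp("2017-07-15 10:00:00", UTC)

# The same stored instant, read through sessions with different time zones.
read_on_source = read_timestamp(stored, UTC)     # session in UTC
read_on_target = read_timestamp(stored, berlin)  # session in Europe/Berlin (CEST in July)

print(read_on_source)  # 2017-07-15 10:00:00
print(read_on_target)  # 2017-07-15 12:00:00
```

The stored instant never changes; only the session time zone used for reading differs, and Magento then treats the shifted string as GMT.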

What was the solution? Well, first I wanted to set the target time zone in the dump file. This way the target MySQL would convert into GMT during the import and back again when the shop reads the values. This works, except that all values get converted with the summer-time offset, because it is summer now.
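One way to read that summer-time caveat, sketched in Python under the assumption that the dump file was given the fixed offset `+02:00` (the post does not say which exact value was used). The helper `import_then_read` and the sample dates are hypothetical, and the code only models the conversion rather than talking to MySQL.

```python
from datetime import datetime, timedelta, timezone
from zoneinfo import ZoneInfo

FMT = "%Y-%m-%d %H:%M:%S"
UTC = timezone.utc
berlin = ZoneInfo("Europe/Berlin")
fixed_plus_two = timezone(timedelta(hours=2))  # assumed value set in the dump file


def import_then_read(literal, import_tz, read_tz):
    """Interpret a dumped literal in import_tz, store it as UTC, read it back in read_tz."""
    stored_utc = datetime.strptime(literal, FMT).replace(tzinfo=import_tz).astimezone(UTC)
    return stored_utc.astimezone(read_tz).strftime(FMT)


# Imported with a fixed +02:00, later read by the shop in a Europe/Berlin session.
summer = import_then_read("2017-07-15 10:00:00", fixed_plus_two, berlin)
winter = import_then_read("2017-01-15 10:00:00", fixed_plus_two, berlin)

# Imported with the named zone instead, so each date gets its own offset.
winter_named = import_then_read("2017-01-15 10:00:00", berlin, berlin)

print(summer)        # 2017-07-15 10:00:00 (round trip is fine)
print(winter)        # 2017-01-15 09:00:00 (one hour off)
print(winter_named)  # 2017-01-15 10:00:00
```

In this model only the winter dates suffer: they are imported with the summer offset but read back with the winter offset.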

Alternative and better solution: just set the time zone for each of the shop’s connections by adding the following to `app/etc/local.xml`:

`<initStatements><![CDATA[SET NAMES utf8;SET time_zone = '+00:00';]]></initStatements>`

This way MySQL doesn’t convert timestamp values in those connections and Magento gets GMT from the database, as expected.
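As a rough sanity check, a small Python sketch can confirm that a `local.xml` actually carries the time zone statement. The embedded sample file, the element nesting around `initStatements`, and the helper names `init_statements` and `forces_utc` are assumptions for illustration; adapt them to the real file.

```python
import xml.etree.ElementTree as ET

# Hypothetical, trimmed-down local.xml; a real file contains more settings.
SAMPLE_LOCAL_XML = """<config>
  <global>
    <resources>
      <default_setup>
        <connection>
          <initStatements><![CDATA[SET NAMES utf8;SET time_zone = '+00:00';]]></initStatements>
        </connection>
      </default_setup>
    </resources>
  </global>
</config>"""


def init_statements(xml_text):
    """Collect the individual SQL statements from every initStatements element."""
    root = ET.fromstring(xml_text)
    statements = []
    for node in root.iter("initStatements"):
        statements += [s.strip() for s in (node.text or "").split(";") if s.strip()]
    return statements


def forces_utc(statements):
    """True if one statement sets the session time zone to +00:00."""
    normalized = [s.lower().replace(" ", "") for s in statements]
    return "settime_zone='+00:00'" in normalized


found = init_statements(SAMPLE_LOCAL_XML)
print(found)
print(forces_utc(found))
```

Running `SELECT @@session.time_zone;` through one of the shop's connections would be the more direct check, but that needs a live database.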

So in my view there are some interesting observations:

- Why does Magento 1, by design, perform a double conversion of date values by storing them as `timestamp` in MySQL (except when the server’s system time zone is GMT)?
  - Magento creates GMT dates.
  - Storing such a value in MySQL interprets it as being in the connection’s time zone and transforms it into GMT for storage.
  - Reading works vice versa: the value is read as GMT and converted into the connection’s time zone.

  `datetime` would be a more fitting data type in my view.

- Magento 2 fixes this consistently by setting the time zone in `web/vendor/magento/framework/DB/Adapter/Pdo/Mysql.php`:

  `$this->_connection->query("SET time_zone = '+00:00'");`

- MySQL automagically converts each timestamp with the time zone offset that is valid for that individual timestamp in the connection’s time zone.